Foundation Models

Scale enables a powerful generalist

Welcome to Archived - the chronicles of a VC attempting to decode innovation in technologies, markets and business models. If you enjoy this post, you can subscribe👇 or catch me at ruth@frontline.vc for a chat!

The term Foundation Model was coined by the Stanford Center for Research on Foundation Models (CRFM) to encapsulate the pivotal (and unfinished) role these AI models (eg. GPT-3, DALL-E) are anticipated to play across a broad range of applications in the coming years. If foundation models truly are comparable to major technological paradigm shifts like Mobile and Cloud, it’s worth taking a step back to gain a clear understanding of how these things work under the hood.

Stanford defines foundation models as:

Models trained on broad data (generally using self-supervision at scale) that can be adapted (fine-tuned) to a wide range of downstream tasks

The key terms in this definition are the building blocks that underpin foundation models (FMs). We’ll step through each of these terms to decode the complexity of this technology.

MODELS

‘Models’ in this context refers to Deep Neural Networks (DNNs) and more specifically, a type of DNN called a Transformer. First, I’m going to explain how a plain vanilla DNN works and then I’ll tackle the specifics of the Transformer architecture.

‘PLAIN VANILLA’ FEED-FORWARD NEURAL NETWORK (FFNN)

Neural networks broadly mimic the structure of the brain - they have neurons, connectors, and like onions & ogres (iykyk), they have layers! The best way to understand neural nets is through an example — so let’s take the canonical one of a network that learns to recognize hand-written digits. Shout out to 3blue1brown for doing most of the heavy lifting here!

First up, let’s look at the structure of the network:

Input Image: The network is trained on a bunch of hand-written digits. The MNIST database provides a collection of 70,000 labelled examples of digits from 0 → 9 (60,000 for training and 10,000 for testing), making it ideal training data for this use case. Each image is made up of 784 pixels (28x28) and each of these pixels becomes a neuron in the input layer of the network.

The Input Layer: Think of a neuron as ‘a thing that holds a number’ between 0 → 1. The closer the number is to 1, the more ‘active’ the neuron. You might ask, well, how do these numbers get assigned? In this example, the number represents the greyscale value of the pixel, so a black pixel = 0, a white pixel = 1 and so on.

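The Hidden Layers: If each neuron in layer 1 represents a pixel, think of each neuron in layer 2 as representing the edge of a number, and each neuron in layer 3 as representing a line or loop of a number. Activations in one layer determine activations in the next through the dark arts of weights and biases (together, the network’s parameters). Each connector (ie. the grey lines) in the network is assigned a weight. Think of the weight as the strength of the connection. Each neuron also has a bias, an offset that sets how easily it becomes active. A neuron’s activation is the weighted sum of the activations in the previous layer plus its bias, squashed back into the 0 → 1 range.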

The Output Layer: The final layer of the network consists of 10 neurons each representing a single digit. The most active neuron in this last layer is basically the network’s choice of what the input image actually is (in this case, a 3).
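The structure above can be sketched in a few lines of plain Python. This is a minimal sketch, not a trained digit recognizer: the two hidden layers of 16 neurons, the sigmoid squashing function, the random weights and the random stand-in image are all assumptions made for illustration, so the ‘prediction’ it produces is meaningless until the network has learned.

```python
import math
import random

def sigmoid(z):
    """Squash any real number into the range 0 -> 1."""
    return 1.0 / (1.0 + math.exp(-z))

def make_layer(n_inputs, n_neurons, rng):
    """One weight per connector and one bias per neuron (random, untrained)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases

def forward(layer, activations):
    """Activation = squashed (weighted sum of previous activations + bias)."""
    weights, biases = layer
    return [sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)]

rng = random.Random(0)

# 784 input pixels -> 16 -> 16 -> 10 output digits (hidden sizes are assumed)
layers = [make_layer(784, 16, rng),
          make_layer(16, 16, rng),
          make_layer(16, 10, rng)]

# Stand-in for a 28x28 greyscale image: 784 numbers between 0 and 1
image = [rng.random() for _ in range(784)]

activations = image
for layer in layers:
    activations = forward(layer, activations)

# The most active output neuron is the network's choice of digit
prediction = max(range(10), key=lambda digit: activations[digit])
```

With random weights the chosen digit is arbitrary, which is exactly the starting point the ‘learning’ step has to improve on.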

Next up, the ‘learning’

Learning = Finding the right weights & biases that minimize the cost function

So how does the network actually ‘learn’? At the outset of training, weights & biases are just randomly assigned numbers. As you can imagine, the network performs pretty poorly as a result. This is where the cost function comes into play. The cost function measures the difference between the network’s output and the output we expect (ie. the ‘3’ neuron fully active and every other neuron inactive). The ‘cost’ tells us how badly the network is performing, so naturally we want to minimize this cost to make the network useful. Minimizing the cost is done using Gradient Descent. For now, just know that this is an algorithm that repeatedly nudges the weights & biases in whichever direction reduces the cost, until it settles in a “local minimum” (we’ll tackle this another time!).
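To make the idea concrete, here is a hedged sketch of gradient descent on the smallest possible ‘network’: one weight and one bias fitting a straight line. The toy data, the mean-squared-error cost, the learning rate and the zero starting values (used instead of random ones so the run is repeatable) are all assumptions for illustration.

```python
def cost(w, b, data):
    """Mean squared difference between predictions and expected outputs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def gradient(w, b, data):
    """Slope of the cost with respect to the weight and the bias."""
    n = len(data)
    dw = sum(2 * (w * x + b - y) * x for x, y in data) / n
    db = sum(2 * (w * x + b - y) for x, y in data) / n
    return dw, db

# Toy data generated from y = 2x + 1, so the 'right' answer is w=2, b=1
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = 0.0, 0.0          # untrained starting point
learning_rate = 0.05     # size of each downhill step (assumed value)
initial_cost = cost(w, b, data)

for _ in range(2000):
    dw, db = gradient(w, b, data)
    w -= learning_rate * dw   # step against the slope to reduce the cost
    b -= learning_rate * db

final_cost = cost(w, b, data)
```

A real network does the same thing with millions of weights at once, and its cost surface has many valleys, which is why the text talks about a local minimum rather than the single best answer.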

***Side Note: I find the concept of deep learning easier to comprehend through the lens of computer vision, which is why I used the image recognition example above. Foundation models however, have primarily taken shape thus far in NLP so I’ll focus the rest of the post on language use cases.***

EVOLUTION OF NLP

Although FFNNs perform well on pattern recognition problems (such as spam detection or number recognition), their lack of ‘memory’ about the inputs they receive means they perform poorly on any type of sequential data (eg. time series, speech, text).

Enter Recurrent Neural Networks (RNNs)!

The most important thing to remember about RNNs is that they process data sequentially - words are fed in one at a time and the network holds information about prior words in memory.

Although this structure enabled progress in NLP, its impact was limited due to two key constraints:

Short-Term Memory: Similar to Dory from Finding Nemo, RNNs have short-term memory - they tend to forget the beginning of a sentence by the time they reach the end. In the above example, the RNN has essentially forgotten the words ‘what’ and ‘time’ as it approaches the end of the sentence. It must try to guess the next word based on the context ‘is it’ - this is a challenging task!

Parallelization Issues: RNNs’ sequential architecture requires the network to compute the memory of the word ‘what’ before it can encode the word ‘time’. The challenge here is one of hardware - RNNs don’t parallelize well (ie. you can’t split the work across many processors at once, so they’re slow to train).
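Both constraints show up even in a toy recurrent loop. The sketch below is an illustration only: the single-number ‘embeddings’ and the hand-picked weights `w_h` and `w_x` are hypothetical, and a real RNN learns matrices of weights rather than two fixed numbers.

```python
import math

def rnn_step(hidden, x, w_h=0.5, w_x=1.0):
    """New memory depends on the old memory and the current word."""
    return math.tanh(w_h * hidden + w_x * x)

def final_state(inputs):
    """Feed words in one at a time; each step must wait for the previous one."""
    hidden = 0.0
    for x in inputs:
        hidden = rnn_step(hidden, x)
    return hidden

# Hypothetical one-number encodings for 'what', 'time', 'is', 'it'
base = [0.9, 0.7, 0.1, 0.2]

# Nudge the first word, then the last word, by the same amount
bumped_first = [1.0] + base[1:]
bumped_last = base[:-1] + [0.3]

effect_first = abs(final_state(bumped_first) - final_state(base))
effect_last = abs(final_state(bumped_last) - final_state(base))
```

Under these assumed weights, changing the first word moves the final memory far less than changing the last word does, which is the short-term memory problem in miniature. The `for` loop also cannot be split up, because each step needs the `hidden` value produced by the one before it.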

TRANSFORMERS

Transformers entered the scene in 2017. This new type of neural network architecture, proposed via Google’s ‘Attention Is All You Need’ paper, quite literally changed the game. Transformers brought two key innovations over their predecessors (RNNs):

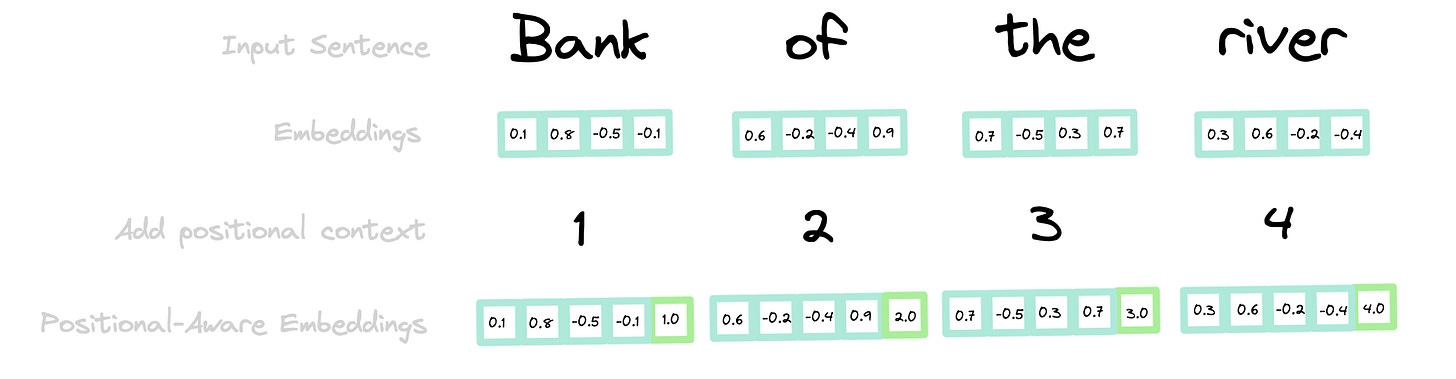

Positional Encodings: Transformers feed all input words into the network at once. This solves the parallelization problem but raises the question of how the network will remember word order. This is done via positional encodings. Put simply, before feeding a bunch of text into the network, you stick a number on each word embedding — in this way, word order is stored in the data itself.

Self-Attention: Transformers have the ability to pay attention to the most important parts of an input, in the same way we as humans do! For example, when you read the sentence below, you understand that we’re not referring to a bank containing $$, rather the bank of a river. Self-attention enables machines to weigh up how the words in a sentence relate to each other and come to the same conclusion.
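The positional-encoding idea can be sketched as follows. This uses the sine/cosine scheme from the original Transformer paper (many later models learn their position numbers instead); the 4-number word embeddings and the three-word sentence are hypothetical and exist only to show the mechanics.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal position vector of length d_model (d_model assumed even)."""
    encoding = []
    for i in range(0, d_model, 2):
        angle = position / (10000 ** (i / d_model))
        encoding.append(math.sin(angle))   # even slots use sine
        encoding.append(math.cos(angle))   # odd slots use cosine
    return encoding

def add_position(sentence, embeddings):
    """Add each word's position vector to its embedding, element by element."""
    encoded = []
    for position, word in enumerate(sentence):
        vector = embeddings[word]
        pe = positional_encoding(position, len(vector))
        encoded.append([v + p for v, p in zip(vector, pe)])
    return encoded

# Hypothetical embeddings; real models use hundreds of dimensions
toy_embeddings = {
    "the":   [0.1, 0.0, 0.2, 0.1],
    "river": [0.9, 0.8, 0.1, 0.0],
    "bank":  [0.8, 0.9, 0.3, 0.1],
}

encoded = add_position(["the", "river", "bank"], toy_embeddings)
swapped = add_position(["the", "bank", "river"], toy_embeddings)
```

The word ‘bank’ ends up with a different vector in `encoded` than in `swapped`, so the network can tell the two word orders apart even though it sees all the words at once.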

But again, how does this learning actually occur? Let’s walk through it using this sentence as an example:

Step 1 — Create Word Embeddings: Neural Networks don’t understand text, but they do understand numbers. Word embeddings are a means of converting words into vectors (ie. an array of numbers) such that similar words are close to each other in the embedding space. Words are similar if they tend to be used in similar contexts (eg. king and queen are similar as they are both used around other words such as crown, royalty etc).

Step 2 — Add Positional Context: As we explained above, we then need to slap a number on each vector so that the system can remember the word’s position in the sentence.

Step 3 - Extract Features with High Attention: In keeping with the culture of the ML ecosystem, there are three cryptic terms used to describe the basis of how the system learns what to pay attention to: query, key and value. The best analogy for this trio is to think of a retrieval system like YouTube. When you search something on YouTube, the engine maps your input in the search bar (ie. the query) against a set of dimensions such as video titles (ie. keys) and presents you with the best matched videos (ie. values). Applying this to our example, we’re trying to add more context to the word embedding for ‘bank’. To do this, we map bank (ie. the query) against all the other words in the sentence (ie. the keys) using some math that we won’t get into [dot product, scaling, normalizing]. The resulting outcome is a heat map, where ‘similar words’ have higher weightings/attention.
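For the curious, the ‘math that we won’t get into’ fits in a short sketch. This is a simplified illustration: the 3-number embeddings are hypothetical, and the word vectors are used directly as queries, keys and values, whereas a real Transformer first multiplies them by learned weight matrices.

```python
import math

def dot(a, b):
    """Dot product: large when two vectors point the same way."""
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Normalize scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query."""
    scale = math.sqrt(len(query))
    scores = [dot(query, key) / scale for key in keys]   # dot product + scaling
    weights = softmax(scores)                            # normalizing
    context = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
    return weights, context

# Hypothetical embeddings for a three-word sentence
sentence = ["the", "river", "bank"]
toy_embeddings = {
    "the":   [0.1, 0.1, 0.1],
    "river": [1.0, 0.9, 0.0],
    "bank":  [0.8, 1.0, 0.3],
}
vectors = [toy_embeddings[word] for word in sentence]

# 'bank' is the query; every word in the sentence acts as a key and a value
weights, context = attend(toy_embeddings["bank"], vectors, vectors)
```

`weights` is one row of the heat map: with these assumed numbers, ‘bank’ pays more attention to ‘river’ than to ‘the’. `context` is the new, context-enriched vector for ‘bank’, a weighted blend of the value vectors.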

Ok, so now that we’ve established the architecture of a foundation model, let’s move onto how these things are trained: via broad data and self-supervision.

SELF-SUPERVISION ON BROAD DATA

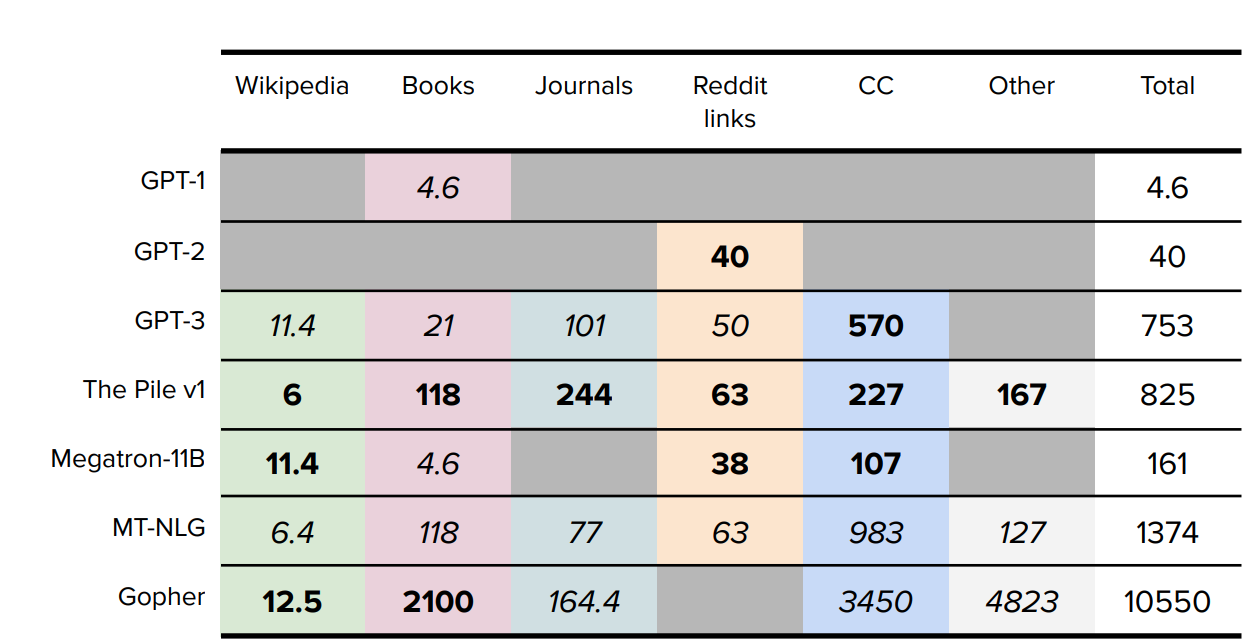

FMs are fed data from, and therefore learn from, a little thing we call the world wide web. This includes everything from Wikipedia and online books to Reddit (CC stands for Common Crawl).

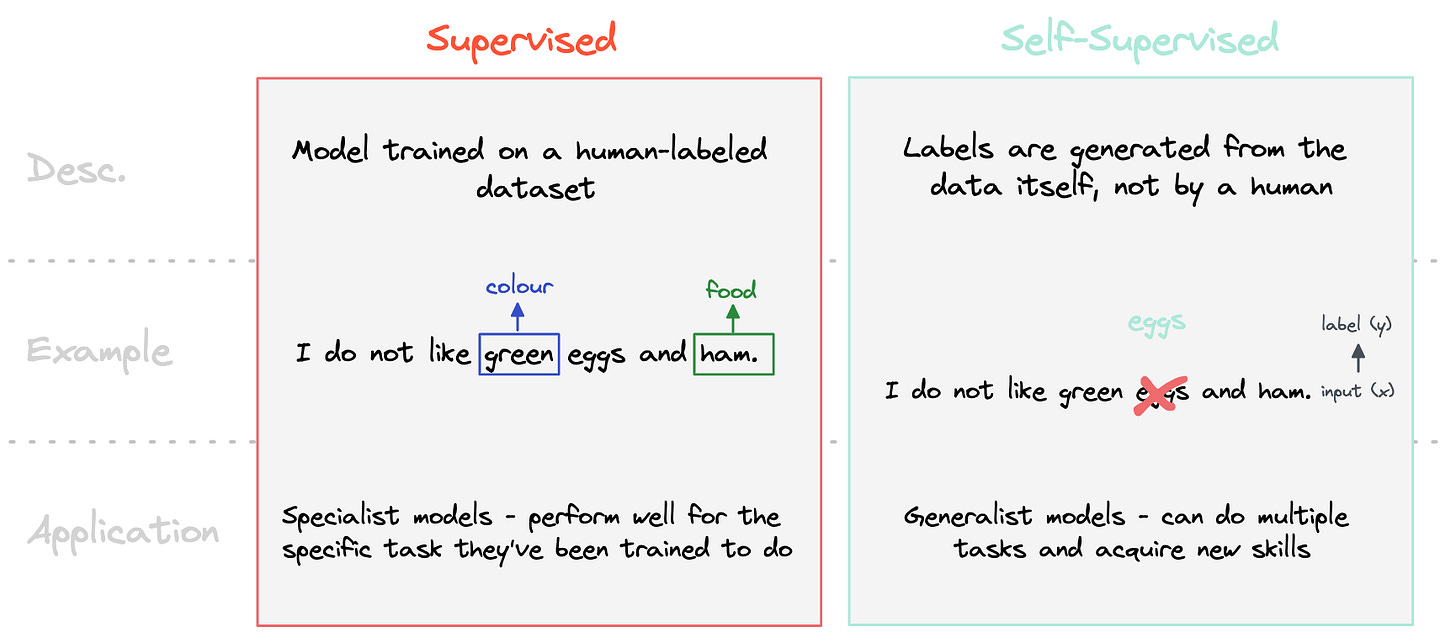

FMs are generally trained in a self-supervised way, meaning that labels are generated from the input data itself, not from human annotators. In the example below, “I do not like green ____ and ham” becomes the input (x) to the network and “eggs”, the hidden word the network has to predict, becomes the label (y). This contrasts with supervised learning, where each word is manually labelled by a human based on category (eg. green = color, ham = food) or grammatical form (eg. noun, adjective).
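Here is a minimal sketch of how labels can fall out of the raw text with no human involved. The helper name `make_masked_examples` and the `____` mask token are made up for illustration; real pipelines work on sub-word tokens and usually mask only a fraction of them.

```python
def make_masked_examples(sentence, mask_token="____"):
    """Turn one raw sentence into (input, label) pairs by hiding each word in turn."""
    words = sentence.split()
    examples = []
    for i, word in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), word))   # x = masked text, y = hidden word
    return examples

examples = make_masked_examples("I do not like green eggs and ham")

# The pair from the text: hide 'eggs' and ask the network to fill it in
x, y = examples[5]
```

One eight-word sentence yields eight training pairs for free, which is why this approach scales to internet-sized datasets.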

Supervised learning has dominated over the past decade with entire industries popping up to help with the gargantuan task of labelling the world’s data (Scale AI, Snorkel). However, labeling data is a costly affair and challenging to scale. Supervised models are also specialists. They get an A+ for the specific job they were trained to do but often fail on inputs that are even marginally out of context.

Let’s think about how children learn for a moment. Don’t you think it’s strange that my 2-year-old nephew can recognize a cow in pretty much any scenario having only seen a few farm animal books, yet a neural network using supervised learning needs to see thousands of examples of cows and may still struggle to identify this one based on its unusual setting?

This is because humans rely on background knowledge they’ve built up about the world through observation — a.k.a common sense. Self-supervised learning is an attempt at replicating this phenomenon in machines. The emergence of BERT and GPT-2 in 2018/19 brought with it a resurgence of interest in self-supervised learning. The impact of this was twofold:

Scale: Models could be trained on huge unlabeled datasets thus beginning the journey of ‘learning the internet’.

Generalizability: Because data labels aren’t explicitly provided, the model learns subtle patterns behind the data and can be more easily applied to similar downstream tasks.

Now we know both the architecture of a FM and how these things are trained. The last piece of the puzzle is understanding how these models are adapted to downstream tasks.

TRANSFER LEARNING

Transfer learning does what it says on the tin — it involves taking the ‘knowledge’ a model has learned from one task (eg. classifying cars) and transferring it to another (eg. classifying trucks). The traditional method of transfer learning is fine-tuning.

Fine-tuning makes most sense to employ when:

You have a lack of data for your target task

Low level features from the pre-trained model are helpful for learning your target task
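One simple flavour of fine-tuning can be sketched like this: keep the pre-trained layers frozen and train only a small new ‘head’ on a handful of target examples. Everything here is a stand-in, including the `pretrained_features` function (a fixed formula rather than a real pre-trained network), the six-example dataset and the learning rate. In practice, fine-tuning often updates some or all of the pre-trained weights as well.

```python
import math

def pretrained_features(x):
    """Stand-in for frozen pre-trained layers: raw input -> reusable features."""
    return [x, x * x]

def predict(head, x):
    """New task-specific head: a logistic classifier on top of the frozen features."""
    weights, bias = head
    z = sum(w * f for w, f in zip(weights, pretrained_features(x))) + bias
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune(head, data, learning_rate=0.1, epochs=1000):
    """Update only the head's weights; the feature extractor never changes."""
    weights, bias = list(head[0]), head[1]
    for _ in range(epochs):
        for x, y in data:
            error = predict((weights, bias), x) - y   # log-loss gradient signal
            features = pretrained_features(x)
            weights = [w - learning_rate * error * f
                       for w, f in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

# A deliberately tiny target dataset: label 1 when x is far from zero
small_dataset = [(-2.0, 1), (-1.5, 1), (-0.3, 0), (0.0, 0), (0.4, 0), (1.8, 1)]

head = fine_tune(([0.0, 0.0], 0.0), small_dataset)
```

Six examples are enough here only because the frozen features already capture something useful for the new task (the `x * x` feature), which mirrors the two conditions listed above.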

Ok, so we’ve now unpacked the technological building blocks of FMs:

1) Transfer Learning, 2) Self-Supervised Learning and 3) the Transformer Architecture, all of which enabled these powerful AI models to come to fruition.

Interestingly, none of these methods were new when GPT-3 arrived. Transfer learning and self-supervised learning have been around for decades, and the Transformer dates from 2017. So what was the true innovation of GPT-3 that kicked off the era of FMs?

The secret sauce was actually scale and the infrastructure that enabled this (an ode to MLOps!)

The release of GPT-3 brought with it a new phenomenon — the emergence of in-context learning. The term emergence means ‘more is different’ - that is, quantitative changes (ie. ‘more’) can lead to unexpected phenomena (ie. ‘different’). In the case of Machine Learning, the sheer scale of GPT-3 at 175bn parameters (‘more’), led to the emergence of in-context learning (‘different’).

In-context learning removes the need for a large labelled target dataset as well as the process of fine-tuning (updating the weights), which saves both time + $$. The model can be adapted to downstream tasks by providing it with a description of the task, a prompt and, in the case of one- or few-shot learning, some examples (‘shots’).
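In practice, ‘adapting’ the model this way is just string assembly. The sketch below builds zero-shot and few-shot prompts; the `build_prompt` helper, the `=>` separator and the example word pairs are assumptions chosen for illustration, and no model is actually called.

```python
def build_prompt(task_description, examples, query):
    """Combine a task description, optional worked examples ('shots') and the prompt."""
    lines = [task_description]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    lines.append(f"{query} =>")   # left open for the model to complete
    return "\n".join(lines)

task = "Translate German to English:"

# Zero-shot: task description and prompt only
zero_shot = build_prompt(task, [], "rot")

# Few-shot: the same prompt preceded by a couple of examples
few_shot = build_prompt(task, [("blau", "blue"), ("Hund", "dog")], "rot")
```

The model’s weights are identical in both cases. The only thing that changes is the text it is asked to continue.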

It’s worth taking a moment to really internalize what’s happening here —

the model can complete a task that it’s never been explicitly trained on

At first blush, this seems pretty magical - and to some extent, it is. Unpacking how this occurs, however, helps us shed some light on this mysterious concept. What you’re basically hoping for is that somewhere on the internet (and thus in your training data), the model has seen the word structure ‘translate German to English’ and ‘rot = red’. A quick Google search shows that the model could have easily picked this structure up from multiple different sites included in its web-crawled training data.

This highlights an ostensibly obvious but nevertheless important fact:

In order for a model to generalize well to a specific downstream task, data relating to that task must be included in the training dataset!

So if scale was the fundamental unlock for foundation models, why was there a lag between GPT-3’s release (June 2020) and the now ubiquitous ‘AI boom’? The answer lies in accessibility. In recent years, there has been a steady democratization of access to state-of-the-art models enabled by a progression in the model interface. Models that were historically reserved for academia can now be accessed by the general public via an approachable user interface.

Technical explanation = fin! If you’ve made it this far, I salute you 🫡 and hope that this post helped demystify some of the technical jargon associated with FMs!

ARE FOUNDATION MODELS REALLY ‘ALL YOU NEED’?

Foundation Models have undoubtedly opened up the floodgates for ML adoption and their existence raises the question as to whether any company would want to build their own models in the future. This is an area I’ve spent some time pressure testing.

There are both offensive and defensive factors that I believe will continue to drive some Enterprises towards a ‘BYOM’ (build your own model) approach.

Proprietary Data: FMs have built up an immense knowledge of the internet - that is, all publicly available datasets. What they haven’t been trained on, however, are the billions of proprietary Enterprise datasets that exist behind closed doors. What about fine-tuning, you might ask? As highlighted above, fine-tuning makes most sense when you have a lack of data for your domain-specific task and when the statistics of the pre-trained model are similar to your target task. For many companies, however, a lack of data is certainly not the problem and often FMs won’t generalize well to domain-specific use cases.

Hallucinations: LLMs do a fantastic job of impressing (and fooling) the human ‘right brain’ (our creative/intuitive side) by generating content that is extremely plausible at first glance. However, we shouldn’t confuse syntax proficiency with content rooted in fact. LLMs, in short, use probabilistic next-word prediction based on an ingested corpus of the internet. Although these models have an exceptionally large memory, their ability to generate untruthful content means in their current state, these systems shouldn’t be deployed (off-the-shelf) in areas where there is a definitive right answer, and in particular where the risk associated with giving the wrong answer is high — take Sam Altman’s word for it!

Data Provenance & Privacy: The history of Machine Learning is a story of both emergence and homogenization (ie. the consolidation of methods). Deep Learning brought about the homogenization of model architectures (Neural Nets, Transformers etc) and now FMs are introducing homogenization of the model itself. FMs are thus a double-edged sword — on one hand, improvements lead to widespread immediate benefits — on the other hand, defects are inherited by all downstream models thus amplifying intrinsic biases. This issue presents a significant barrier to adoption for companies in regulated industries such as Banking, Healthcare, Legal — where data provenance is critical.

Squeezing Margins & Latency Issues: Where FMs are implemented in customer-facing use cases, the cost of running inference through FM providers like OpenAI becomes prohibitively expensive at scale, squeezing margins of the businesses building on top of them. In a similar vein, bigger is not always better with respect to model size where latency is concerned. For example, increasing the size of a code completion model may improve performance but if it’s too slow to provide the code suggestions as the user is typing, the product’s value is questionable.

For these reasons, I think it’s clear that Foundation Models are not the only thing we need! They represent a critical piece of the puzzle but not the whole picture. I expect a hybrid world to emerge, where we’ll see a mix of companies building their own models and leveraging FMs. As a VC, I’m excited about companies that are lowering the barriers to entry on both sides (shout out to MosaicML, a Frontline portfolio company, that’s leading the way on the BYOM side!). This layer of ‘picks and shovels’ within the AI stack, will unlock exponential progress and an abundance of intelligent applications in the coming years - a future I’m undeniably excited about!
